IBM develops AI-based applications for cloud video

Watson technology will make it possible to analyze, in near real time, how audiences react on social networks to a live event, to detect scenes based on video content, and to segment viewers according to their consumption habits across platforms.

IBM is rolling out new cloud video services based on its Watson artificial intelligence technology to help deliver personalized, differentiated viewing experiences to consumers.

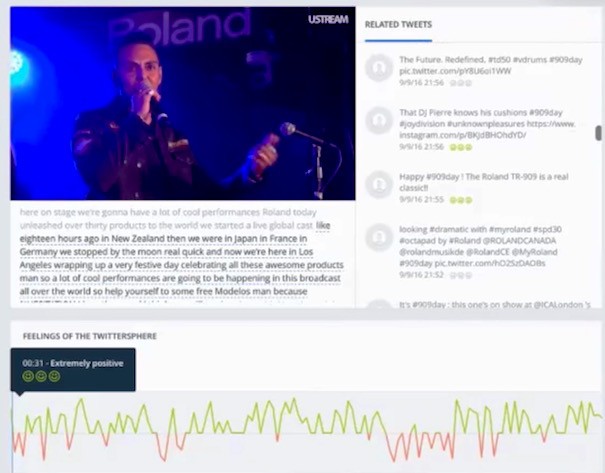

Available through Watson APIs on IBM Cloud, the services will, for example, analyze audience reaction on social networks to a live event in near real time, detect scenes based on video content, and segment viewers according to their consumption habits on each platform.

Braxton Jarratt, general manager of IBM Video Cloud, notes that “companies are creating videos that generate a great deal of valuable data, but they still have no easy way to identify this information or the audience's reaction to certain types of content. Today, with these new services and the cognitive capabilities of our technology, IBM can help companies gain relevant insight into their videos and their audiences so that they can create and evaluate more targeted content for specific audiences.”

IBM says the Speech to Text and AlchemyLanguage APIs can be used to track viewers' reactions on social networks “word by word” while a live event is under way. During a product launch, for example, viewer engagement may rise or fall as specific features are shown, providing valuable information about which aspects viewers consider important and which should be prioritized in the future.
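The idea of lining up audience reaction with what is being said on stage can be illustrated with a minimal Python sketch. It makes no calls to any Watson API: the function name `engagement_by_segment`, the segment labels, and the timestamps are all hypothetical, standing in for a timestamped transcript and a feed of timestamped social posts. It assumes the segments are sorted by start time and that each label is unique.

```python
from bisect import bisect_right
from collections import Counter


def engagement_by_segment(segments, post_times):
    """Count social posts that fall inside each transcript segment.

    segments: list of (start_seconds, label), sorted by start time,
              with unique labels (an assumption of this sketch).
    post_times: iterable of post timestamps, in seconds from event start.
    """
    starts = [start for start, _ in segments]
    counts = Counter()
    for t in post_times:
        # Index of the last segment that starts at or before time t.
        i = bisect_right(starts, t) - 1
        if i >= 0:  # posts before the first segment are ignored
            counts[segments[i][1]] += 1
    return [(label, counts[label]) for _, label in segments]


# Made-up sample data for a product launch.
segments = [(0, "intro"), (60, "camera"), (180, "battery"), (300, "price")]
post_times = [5, 70, 75, 90, 110, 200, 310, 320, 330]
result = engagement_by_segment(segments, post_times)
print(result)
```

A real pipeline would presumably weigh sentiment as well as volume; this sketch only counts posts per segment to show the time alignment.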

As for scene detection, currently available technology allows automatic segmentation based on visual changes in the video. Understanding the content and separating it by topic still has to be done manually by a person. With Watson, IBM plans to offer a solution that “understands semantics and patterns in language and images, making it possible to identify higher-level concepts such as when a film or a show changes topic.”
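For context, segmentation by visual change is often done by comparing brightness or color histograms of consecutive frames and marking a cut where they differ sharply. The Python sketch below shows that general technique under simplifying assumptions; it is not IBM's method. Frames are represented as non-empty flat lists of 8-bit pixel values, and the function names, bin count, and threshold are illustrative choices.

```python
def histogram(frame, bins=4, max_value=256):
    """Normalized brightness histogram of a frame (flat list of pixel values)."""
    counts = [0] * bins
    for p in frame:
        counts[p * bins // max_value] += 1
    total = len(frame)
    return [c / total for c in counts]


def detect_cuts(frames, threshold=0.5):
    """Return indices of frames whose histogram differs sharply from the previous one."""
    cuts = []
    prev = None
    for i, frame in enumerate(frames):
        hist = histogram(frame)
        if prev is not None:
            # Half the L1 distance lies in [0, 1]; 1 means no overlap at all.
            diff = sum(abs(a - b) for a, b in zip(hist, prev)) / 2
            if diff > threshold:
                cuts.append(i)
        prev = hist
    return cuts


# Made-up frames: two dark, two bright, one dark.
dark = [10, 20, 30, 40]
bright = [200, 210, 220, 230]
frames = [dark, dark, bright, bright, dark]
cuts = detect_cuts(frames)
print(cuts)
```

A detector like this only sees pixel statistics, which is why it cannot tell when the topic changes while the picture stays the same.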

According to the company, a major content provider is already testing the service as a way to improve video classification, the indexing of specific chapters, and the search for content relevant to its customers.

Media Insights Platform

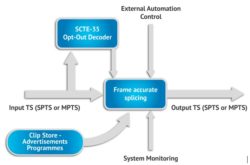

Separately, Media Insights Platform is already being incorporated into the existing catalog, logistics, and subscriber management tools on IBM Cloud, giving a more detailed view of existing viewing habits.

The new service, scheduled for launch late this year, is designed to use Media Insights Platform to analyze video viewing and social media behavior, identifying complex patterns that can help improve content matching and find new viewers interested in existing content. Media Insights Platform uses several Watson APIs, including Speech to Text, AlchemyLanguage, Tone Analyzer, and Personality Insights.

To demonstrate the capabilities of its artificial intelligence system, IBM, in partnership with 20th Century Fox, has created a “cognitive trailer” for the horror film Morgan. To do so, Watson analyzed and learned from more than a hundred horror movie trailers.

IBM also worked at this year's US Open tennis tournament to convert commentary to text more accurately, thanks to Watson having learned tennis terminology and player names before the tournament.

https://www.youtube.com/watch?v=U-c0jTwxG-0
